Hello everyone! I’m Hikaru Isayama (nickname: sean), a Software Developer Intern at SORACOM.

As a college student from the United States, I am very fortunate to be able to stay at my grandparents’ home in Tokyo for the duration of the internship. However, I find myself worrying about a few things when I leave for the office: What if an accident occurs at home? What if there’s an emergency? How will I be alerted if something goes wrong?

In a two-person household like my grandparents’, these concerns must be common whenever someone leaves the home.

Receiving Notifications During Emergencies

Soracom Cloud Camera Services, or “SoraCam,” is a cloud service that streamlines surveillance camera management. With a SoraCam-compatible device, it supports an event-based approach that can be used instead of traditional constant monitoring.

Constant monitoring of surveillance systems typically requires a human to continuously watch camera feeds and respond to any issues that arise. This approach can be impractical and difficult to maintain, especially during work or school when continuous monitoring is not feasible. An alternative option is to have an AI (Artificial Intelligence) system that is programmed to alert users to significant events or anomalies. However, this approach can be resource-intensive and costly, as it requires the AI to be operational around the clock.

Soracom Cloud Camera Services enables devices like the ATOM Cam 2 to autonomously detect events and send this information to the cloud. By integrating this service with AI, we can reduce costs and resource use, because the AI models run only when event footage is sent to the cloud.

The cloud motion detection recording function requires a license that includes motion detection, available from the Soracom Cloud Camera Services page. The stored recordings can then be exported to your own system using the SoraCam API.

This event-based architecture is highly effective for efficient surveillance management, as well as for reducing operational costs and alleviating the burden on human resources. By leveraging Soracom Cloud Camera Services, it becomes possible to create efficient and secure surveillance systems suitable for various environments, including homes and offices.

For this blog, I decided to use the ATOM Cam 2, a SoraCam compatible product.

Developing a Computer Vision Model for Fall Detection

For us humans, it is very easy to recognize when a person is standing, is falling, or has fallen. Machines and devices, however, need machine learning models, and more specifically computer vision models, to solve this kind of classification problem. I used YOLOv8 and Roboflow to develop a model that can classify these three states.

YOLOv8 is a specific version of the YOLO (You Only Look Once) object detection model. YOLO is a popular series of real-time object detection models known for their speed and accuracy, and I will use YOLOv8 to detect humans in the camera footage. Roboflow, on the other hand, is a platform and tool for managing, labeling, and augmenting datasets for machine learning projects, with a particular focus on computer vision tasks like object detection. To see how YOLOv8 and Roboflow work together, try the demo here.

Answering the question of whether a person has fallen is also possible with a multimodal LLM (generative AI) such as ChatGPT. However, while ChatGPT excels at general-purpose tasks, it struggles with specific use cases such as learning from or analyzing video files. For example, at the time of writing it is not possible to upload video files to ChatGPT, so it cannot determine from an MP4 file how long a person has been on the ground. To address these difficulties, I used YOLOv8 and Roboflow, which are better suited to video analysis and object detection tasks.

I referenced the steps and information on this page for the implementation of this model.

- Obtaining Data: Using the ATOM Cam 2, I collected approximately 30 seconds of video capturing myself moving around the room, falling down, and then getting up. The video was captured so that the time periods of “standing,” “falling,” and “fallen” were clearly distinguished. This allows the trained model to label these periods in the videos provided during the testing phase.

- Divide into Frames: From the resulting mp4 video, frames were extracted at a specific frame rate (fps) and each frame was saved as a still image. For this video, I used Roboflow to create around 250 frames at 8 fps.

- Label “standing,” “falling,” or “fallen”: I manually labeled each frame with one of the following states: “standing,” “falling,” or “fallen.” This helps the model “learn” when a person is standing, is falling, or has fallen. With this, the dataset is ready for training.

- Train Model using Roboflow: The model is trained using YOLOv8. That is, the model is “taught” to recognize the patterns that distinguish a person who is standing, is falling, or has fallen. Each frame of the video is treated as its own classification problem. During training, the model learns patterns from the labeled data and is tuned to minimize errors, so more data generally leads to better accuracy.

- Model Testing: Training took about two hours. Once it is complete, the model can be tested on any video with Roboflow’s “Visualize” tool, where videos can be dragged and dropped or uploaded. The number next to each label is the model’s confidence in that label.
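As a rough check on the frame count in the “Divide into Frames” step, a 30-second clip sampled at 8 fps yields about 240 frames, which is in line with the roughly 250 frames mentioned above. The sketch below is not Roboflow’s implementation; it only illustrates one way frame indices could be chosen when downsampling a video. The function name `sampled_frame_indices` and the 20 fps source rate are assumptions made for illustration.

```python
def sampled_frame_indices(total_frames: int, source_fps: float, target_fps: float) -> list[int]:
    """Return the indices of source frames to keep when downsampling to target_fps."""
    if source_fps <= 0 or target_fps <= 0:
        raise ValueError("frame rates must be positive")
    if target_fps > source_fps:
        raise ValueError("target_fps cannot exceed source_fps")
    duration_s = total_frames / source_fps   # clip length in seconds
    n_out = int(duration_s * target_fps)     # number of frames to keep
    step = source_fps / target_fps           # source frames per kept frame
    return [min(int(i * step), total_frames - 1) for i in range(n_out)]


# Assumed example: a 30-second clip recorded at 20 fps (600 frames), sampled at 8 fps.
indices = sampled_frame_indices(total_frames=600, source_fps=20, target_fps=8)
print(len(indices))
```

Each kept index corresponds to one still image that would then be labeled by hand.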

The model is now trained, tested, and ready to be deployed at any time. If you would like better performance, options include enlarging the dataset by importing more data or training with a different model.

Implementing a Live Notification Feature

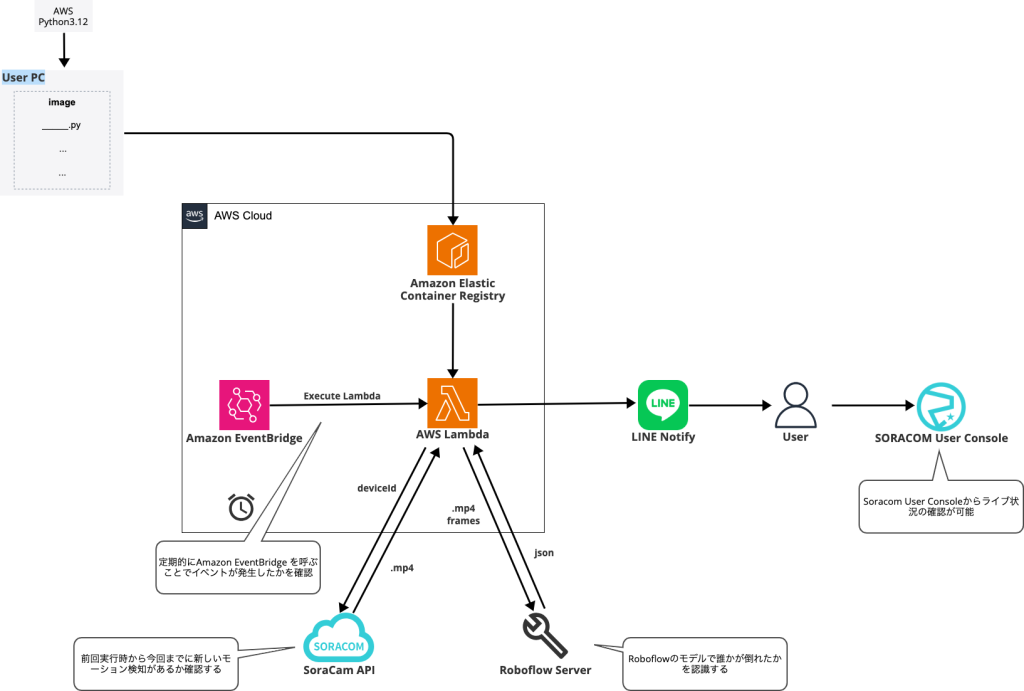

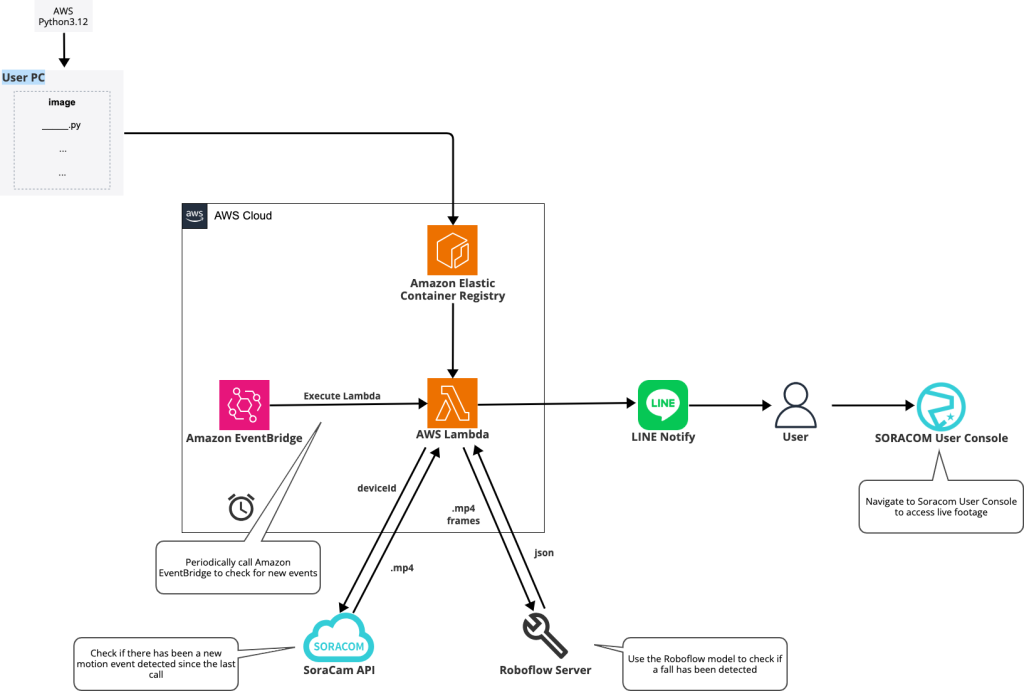

Now that the model has been developed, the next step is to implement the live notification feature. There are many ways to achieve this, but here is the overall structure of the one I will use:

1. Amazon EventBridge periodically invokes AWS Lambda so that we can check whether a new event has been captured. AWS Lambda then performs steps 2 to 4 below.

2. Using the SoraCam API, we check whether a new event has occurred. Specifically, we call the following APIs:

   - First, we check for new motion events using the listSoraCamDeviceEventsForDevice API. If there is an event within a set time before the current time, we begin the export process.

   - Using the exportSoraCamDeviceRecordedVideo API, we request an export of the video from the cloud (an MP4 file compressed in ZIP format). This returns the exportId, which is required by the next API. NOTE: even after the exportId is obtained, the export may not have been initialized yet, so it may be necessary to wait briefly before fetching the export.

   - Finally, we pass the exportId to the getSoraCamDeviceExportedVideo API to retrieve a link for downloading the video. The status property of the response shows whether the export is complete; if it is not, call this API again after a few seconds.

3. Using the Roboflow library, the video is split into frames and each frame is classified individually. At first, I attempted to run it on the Python 3.12 runtime, but because NumPy includes compiled native code (written largely in C), it was environment-dependent and did not work well. The Roboflow library was also too large for an AWS Lambda layer, so it was best to use a container image, managed with Amazon Elastic Container Registry (ECR). Once the results were obtained from the Roboflow library, I implemented logic so that only a few “fallen” labels are not treated as an emergency, while a continued run of “fallen” labels is.

4. In the case of an emergency, the user is notified through LINE. To set up notifications through LINE, refer to this recipe.

5. If necessary, the live video can be checked in the Soracom user console. This makes it easy to check on the situation after receiving a LINE message that a person has fallen.
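The event check and the export polling described above can be sketched as follows. This is a minimal illustration, not the code used in this project. `fetch_export` is a hypothetical callable standing in for a call to the getSoraCamDeviceExportedVideo API. The field names `time` and `url` and the status values `completed` and `failed` are assumptions that should be checked against the SoraCam API reference; only the `status` property and the exportId come from the description above.

```python
import time
from typing import Any, Callable, Dict, List


def has_recent_event(events: List[Dict[str, Any]], now_ms: int, window_ms: int) -> bool:
    """True if any event timestamp (assumed field "time", in ms) is within the window."""
    return any(0 <= now_ms - e["time"] <= window_ms for e in events)


def wait_for_export(
    fetch_export: Callable[[str], Dict[str, Any]],  # hypothetical API wrapper
    export_id: str,
    interval_s: float = 3.0,
    max_attempts: int = 20,
    sleep: Callable[[float], None] = time.sleep,
) -> str:
    """Poll until the export is complete, then return its download link."""
    for _ in range(max_attempts):
        info = fetch_export(export_id)
        status = info.get("status")
        if status == "completed":        # assumed status value
            return info["url"]           # assumed field name
        if status == "failed":           # assumed status value
            raise RuntimeError(f"export {export_id} failed")
        sleep(interval_s)                # not ready yet: wait, then ask again
    raise TimeoutError(f"export {export_id} not ready after {max_attempts} attempts")


# Usage with a fake API that becomes ready on the third call.
fake_responses = iter([
    {"status": "initializing"},
    {"status": "processing"},
    {"status": "completed", "url": "https://example.invalid/video.zip"},
])
waits: List[float] = []
link = wait_for_export(lambda _id: next(fake_responses), "exp-1",
                       interval_s=0.5, sleep=waits.append)
print(link)
```

Passing the fetch function and the sleep function as parameters keeps the polling logic testable without network access. Inside AWS Lambda, the total wait (`interval_s` times `max_attempts`) needs to stay below the function timeout.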

With this, a safety monitoring system using cloud-based cameras and AI is complete!
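The emergency rule from the classification step, where a few isolated “fallen” labels are ignored but a sustained run counts as an emergency, could be sketched as below. The function names and the threshold of 16 consecutive frames (about two seconds at 8 fps) are assumptions for illustration, not the values used in this project.

```python
from itertools import groupby
from typing import Iterable


def longest_run(labels: Iterable[str], target: str = "fallen") -> int:
    """Length of the longest consecutive run of `target` in the per-frame labels."""
    return max(
        (sum(1 for _ in group) for label, group in groupby(labels) if label == target),
        default=0,
    )


def is_emergency(labels: Iterable[str], min_consecutive: int = 16) -> bool:
    """Treat a sustained run of "fallen" frames as an emergency (threshold is assumed)."""
    return longest_run(labels) >= min_consecutive


# A brief misclassification is ignored, while a person staying down triggers an alert.
brief = ["standing"] * 10 + ["fallen"] * 3 + ["standing"] * 10
sustained = ["standing"] * 10 + ["falling"] * 3 + ["fallen"] * 20
print(is_emergency(brief), is_emergency(sustained))
```

Requiring consecutive frames rather than a total count makes the rule less sensitive to scattered single-frame misclassifications.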

Summary

Roboflow’s user-friendly interface allows users with minimal programming experience to easily create computer vision models. By leveraging their own data, users can also tailor models to achieve maximum accuracy for their specific needs. When combined with Soracom Cloud Camera Services, it makes building and deploying a variety of customized applications such as baby monitors or pet cameras very straightforward. For those interested in creating custom datasets or developing models tailored to specific needs, exploring Soracom Cloud Camera Services and Roboflow is a great next step.

Recently, SORACOM Flux, a low-code IoT application builder using AI services, was announced. If you do not need to train on your own data and only need to classify images rather than videos, SORACOM Flux provides an easy alternative way to detect whether someone has fallen.

Reference: Nihon Keizai Shimbun article

With SORACOM Flux, answering classification questions about images is much easier because it uses AI services such as GPT-4o. SORACOM Flux offers a variety of AI models, trained and tuned on massive datasets, which makes it effective for a wide range of cases. The SORACOM Flux workflow design tools also make it easy to ask follow-up questions such as “is there an injury?” or “is there a need for medical attention?” after detecting a fall. For more information, read here:

Using YOLOv8 and Roboflow, I was able to manually create a model that can handle my unique use case with high accuracy. This model is also designed to handle video formats and live footage, making it easy to integrate into your own system. Depending on your needs and interests, feel free to choose the tool that best suits your project.

― Soracom Software Development Intern, Hikaru Isayama (sean)